Some insights from working with event-cameras for Human Pose Estimation

As I write, my paper on event-cameras and human pose estimation has been accepted at the Computer Vision and Pattern Recognition workshop on event-cameras. If you do not know what an event-camera is, I wrote a brief introduction to them. In this post, instead, I just want to share some advice I found useful during my research, hopefully to inspire curiosity in other researchers, practitioners, and entrepreneurs.

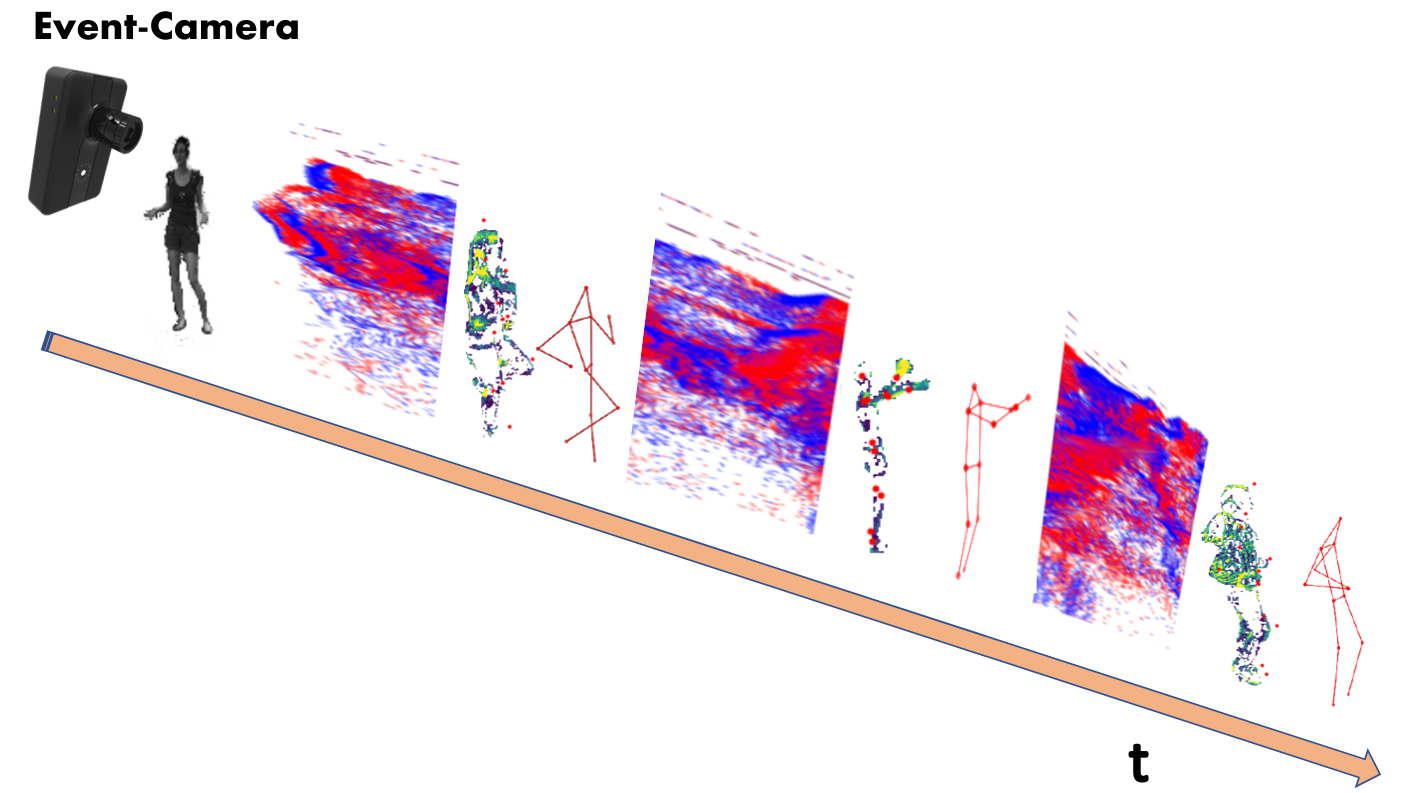

The problem: from a moving subject to skeleton estimation

Open-source research

I’m convinced that computer vision researchers and practitioners should guide each other to advance the state of the art in meaningful ways. To reach such a goal, researchers should step down from the ivory tower. How? I believe the first steps are writing good-quality code and summarizing papers for the general public.

Open-source doesn’t mean low-quality. Event-based vision is novel, very novel; an OpenCV for event-cameras doesn’t exist yet, although some are working on it. The Robotics and Perception Group (University of Zurich and ETH Zurich) is collecting paper implementations on their GitHub page. Prophesee, a private company focused on event-based vision, already has an SDK for its event-cameras, but the software is limited to its own products.

As an attempt, I developed my own tiny library with some tools for event-cameras. You can find it at https://tinyurl.com/vy6zjdkz.

Event-synthesis

Data are the fuel of novel Deep Learning models. Continuing the analogy with oil, data are also extremely expensive to extract and process. In particular, datasets recorded with event-cameras are not comparable in size and variance to the large RGB datasets. For these reasons, event synthesis is so appealing: it produces event data without recording anything new. Recent literature provides interesting results on generating synthetic events from RGB images or videos (Rebecq et al. 2019).

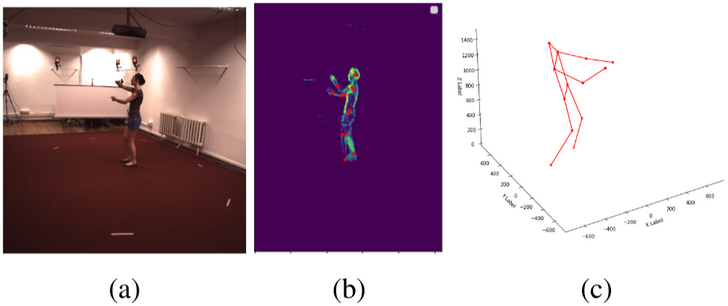

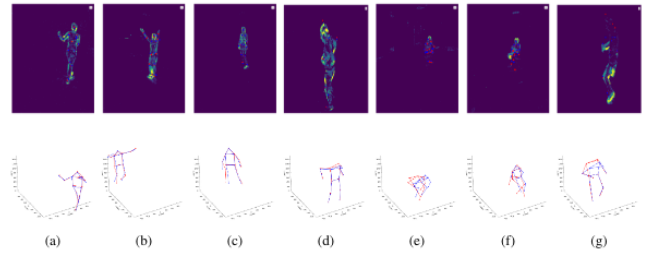

I used my event-library to generate synthetic events from millions of RGB frames of the standard Human3.6m dataset (Ionescu et al. 2014).

Synthetic events make it possible to recycle standard RGB datasets and test algorithms without using an event-camera

I find this especially intriguing for entrepreneurs and engineers in automotive and time-critical applications. Companies can convert their own data to synthetic events in order to evaluate event-based algorithms before acquiring event-cameras (which are still expensive, although major companies entering the field will lower costs).
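
To make the idea of event synthesis more concrete, here is a minimal sketch of the principle usually described for event-cameras: a pixel fires an event whenever its log-intensity changes by more than a contrast threshold. This is not the code of my library nor the method of the cited papers; the names `Event` and `synthesize_events`, the threshold value, and the choice to stamp every event with the time of the incoming frame are all assumptions made for illustration. It uses plain Python lists for readability, while a practical version would be vectorized.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """Hypothetical event record: timestamp, pixel position, polarity."""
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for a brightness increase, -1 for a decrease


Frame = Sequence[Sequence[float]]  # grayscale intensities, e.g. in [0, 255]


def synthesize_events(
    frames: Sequence[Frame],
    timestamps: Sequence[float],
    threshold: float = 0.2,  # assumed contrast threshold on log-intensity
    eps: float = 1e-3,       # avoids log(0) on black pixels
) -> List[Event]:
    """Emit events where the log-intensity change exceeds `threshold`."""
    if len(frames) != len(timestamps):
        raise ValueError("frames and timestamps must have the same length")
    events: List[Event] = []
    if not frames:
        return events

    # Per-pixel reference log-intensity, updated each time events fire.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]

    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                diff = math.log(value + eps) - ref[y][x]
                count = int(abs(diff) / threshold)  # thresholds crossed
                if count == 0:
                    continue  # static pixel: no event at all
                polarity = 1 if diff > 0 else -1
                # Simplification: all events share the frame timestamp.
                events.extend(Event(t, x, y, polarity) for _ in range(count))
                ref[y][x] += polarity * count * threshold
    return events


if __name__ == "__main__":
    # Two 2x2 frames: only the top-right pixel gets brighter.
    frames = [
        [[100.0, 100.0], [100.0, 100.0]],
        [[100.0, 200.0], [100.0, 100.0]],
    ]
    for event in synthesize_events(frames, [0.0, 0.01]):
        print(event)
```

Since events are produced only between consecutive frames, the temporal resolution of this sketch is bounded by the frame rate of the source video; interpolating timestamps between frames is one way to soften that limit.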

My research – what about Human Pose Estimation?

Only in code veritas.

You have certainly seen the results of “Human Pose Estimation” (also called “Motion Capture”) techniques. They are used in Star Wars, Avatar, and major video games to produce amazing special effects (some examples: https://www.youtube.com/watch?v=bkvW9hsypHw).

Motion Capture systems are based on specialized hardware and a large number of synchronized cameras. In these conditions, high-speed motions are hard to capture. My research looks for an answer to a common problem in these applications: processing data at high speed.

Motion Capture with an event-camera

Event-cameras record rapid movements efficiently. These devices could be disruptive in the motion capture industry, but first there are some major issues to tackle:

- First, static parts of the body generate no events (as in the figure above). This is a major issue, as no information means lower reconstruction accuracy. In this case, event-cameras need some backup from standard RGB cameras.

- Second, smarter techniques should be developed to leverage the uniqueness of events. In my previous post about event-cameras, I explore recent event-by-event and group-of-events approaches (Gallego et al. 2020).
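
Both issues can be illustrated with a small sketch of a group-of-events representation, assuming events are simple `(t, x, y, polarity)` tuples. The functions `accumulate_events` and `silent_fraction` are hypothetical names introduced here, not part of any existing SDK: the first sums polarities inside a time window into a frame, the second measures how much of the sensor received no event at all, which is what happens where the subject stands still.

```python
from typing import List, Sequence, Tuple

# Hypothetical event layout: (timestamp in seconds, x, y, polarity)
EventTuple = Tuple[float, int, int, int]


def accumulate_events(
    events: Sequence[EventTuple],
    width: int,
    height: int,
    t_start: float,
    t_end: float,
) -> List[List[int]]:
    """Sum event polarities per pixel for events with t_start <= t < t_end."""
    frame = [[0] * width for _ in range(height)]
    for t, x, y, polarity in events:
        if t_start <= t < t_end and 0 <= x < width and 0 <= y < height:
            frame[y][x] += polarity
    return frame


def silent_fraction(
    events: Sequence[EventTuple],
    width: int,
    height: int,
    t_start: float,
    t_end: float,
) -> float:
    """Fraction of pixels that received no event in the time window."""
    # Track active pixels explicitly: opposite polarities can cancel to
    # zero in the accumulated frame even though the pixel did fire.
    active = {
        (x, y)
        for t, x, y, _ in events
        if t_start <= t < t_end and 0 <= x < width and 0 <= y < height
    }
    return 1.0 - len(active) / (width * height)


if __name__ == "__main__":
    events = [
        (0.000, 0, 0, +1),
        (0.001, 0, 0, +1),
        (0.002, 1, 0, -1),
        (0.500, 1, 1, +1),  # outside the window used below
    ]
    print(accumulate_events(events, 2, 2, 0.0, 0.01))
    print(silent_fraction(events, 2, 2, 0.0, 0.01))
```

A high silent fraction over the region of the body is a cheap signal that the event stream alone carries too little information, and that a fallback such as an RGB frame may be needed.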

Bibliography

Gallego, Guillermo, Tobi Delbruck, Garrick Michael Orchard, Chiara Bartolozzi, Brian Taba, Andrea Censi, Stefan Leutenegger, et al. 2020. “Event-Based Vision: A Survey.” IEEE Transactions on Pattern Analysis and Machine Intelligence. Institute of Electrical and Electronics Engineers (IEEE), 1. http://dx.doi.org/10.1109/TPAMI.2020.3008413.

Ionescu, Catalin, Dragos Papava, Vlad Olaru, and Cristian Sminchisescu. 2014. “Human3.6m: Large Scale Datasets and Predictive Methods for 3d Human Sensing in Natural Environments.” IEEE Transactions on Pattern Analysis and Machine Intelligence 36 (7):1325–39. https://doi.org/10.1109/tpami.2013.248.

Rebecq, Henri, René Ranftl, Vladlen Koltun, and Davide Scaramuzza. 2019. “High Speed and High Dynamic Range Video with an Event Camera.” IEEE Transactions on Pattern Analysis and Machine Intelligence. http://rpg.ifi.uzh.ch/docs/TPAMI19%5FRebecq.pdf.